You don't implement amortized analysis. It's a technique to get more accurate O bounds.

The essential observation you have to make is, that expensive operations cannot happen at any time.

In the case of an array-backed data structure, the array needs resizing every now and then – when it's full. This is the most expensive operation and takes O(n) time. All other inserts into the array are O(1).

To determine the runtime for inserting n items, you can multiply n with the most expensive operation O(n), which results in an overall runtime behavior of O(n^2).

However, this is inaccurate because resizing cannot happen very often.

When talking about money, you amortize cost, when you pay off your debt with multiple small payments over time.

We can use this model to think about algorithms as well. We simply substitute "time" with "money" to avoid mental mapping.

Once the array is fulled to it's length n, we can double it's size. We need to make the following operations:

- Allocate

2n chunks of memory

- copy

n items

If we assume that both allocating memory and copying happen in linear time, this is going to be a very expensive operation. However, we can now use the idea of debt and amortizing it for our analysis. Only, we are going to amortize our debt before we actually make it.

Let's assume, that our balance (of money/time) is back to 0 (i.e. we don't have a debt nor do we have any leftovers) once we have resized the array.

This has the following implication:

- Inserting the next

n items will cover the cost of resizing and copying (we have n used slots, and n unused slots`)

We can now think about how much every insert operation needs to pay for:

- The insert

- The cost of allocating one chunk of memory

- the cost of moving it to the newly allocated memory

We have now covered the costs to allocate memory, copy and insert the next n elements. However, we have yet neglected allocating space for the old n elements as well as copying them.

We simply distribute the costs of our old n elements to our new (yet to be inserted) n elements:

- The cost of allocating one chunk of memory

- the cost of moving it to the newly allocated memory

In total, every, every insert operation will cost 5 units. This pays for its own insertion, and the moving and allocating of space for itself and one of the old elements.

Every insert-operation still takes constant amount of time, but the resizing happens for free: We amortized it by spending "more" time on each insertion.

As a result, inserting n elements takes O(n) time.

Other techniques for amortized analysis are explained here.

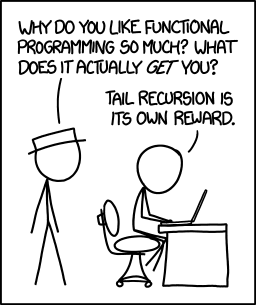

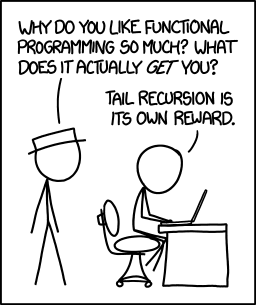

Tail call optimization is present in many languages and compilers. In this situation, the compiler recognizes a function of the form:

int foo(n) {

...

return bar(n);

}

Here, the language is able to recognize that the result being returned is the result from another function and change a function call with a new stack frame into a jump.

Realize that the classic factorial method:

int factorial(n) {

if(n == 0) return 1;

if(n == 1) return 1;

return n * factorial(n - 1);

}

is not tail call optimizatable because of the inspection necessary on the return. (Example source code and compiled output)

To make this tail call optimizeable,

int _fact(int n, int acc) {

if(n == 1) return acc;

return _fact(n - 1, acc * n);

}

int factorial(int n) {

if(n == 0) return 1;

return _fact(n, 1);

}

Compiling this code with gcc -O2 -S fact.c (the -O2 is necessary to enable the optimization in the compiler, but with more optimizations of -O3 it gets hard for a human to read...)

_fact(int, int):

cmpl $1, %edi

movl %esi, %eax

je .L2

.L3:

imull %edi, %eax

subl $1, %edi

cmpl $1, %edi

jne .L3

.L2:

rep ret

(Example source code and compiled output)

One can see in segment .L3, the jne rather than a call (which does a subroutine call with a new stack frame).

Please note this was done with C. Tail call optimization in Java is hard and depends on the JVM implementation (that said, I haven't seen any that do it, because it is hard and implications of the required Java security model requiring stack frames - which is what TCO avoids) -- tail-recursion + java and tail-recursion + optimization are good tag sets to browse. You may find other JVM languages are able to optimize tail recursion better (try clojure (which requires the recur to tail call optimize), or scala).

That said,

There is a certain joy in knowing that you wrote something right - in the ideal way that it can be done.

And now, I'm going to get some scotch and put on some German electronica...

To the general question of "methods to avoid a stack overflow in a recursive algorithm"...

Another approach is to include a recursion counter. This is more for detecting infinite loops caused by situations beyond one's control (and poor coding).

The recursion counter takes the form of

int foo(arg, counter) {

if(counter > RECURSION_MAX) { return -1; }

...

return foo(arg, counter + 1);

}

Each time you make a call, you increment the counter. If the counter gets too big, you error out (in here, just a return of -1, though in other languages you may prefer to throw an exception). The idea is to prevent worse things from happening (out of memory errors) when doing a recursion that is much deeper than expected and likely an infinite loop.

In theory, you shouldn't need this. In practice, I've seen poorly written code that has hit this because of a plethora of small errors and bad coding practices (multithreaded concurrency issues where something changes something outside the method that makes another thread go into an infinite loop of recursive calls).

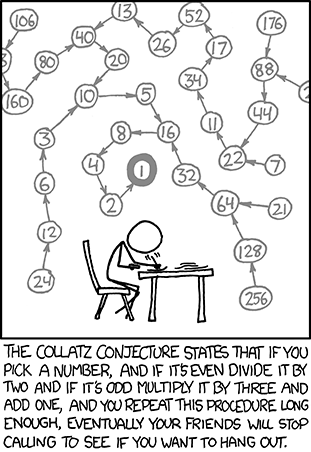

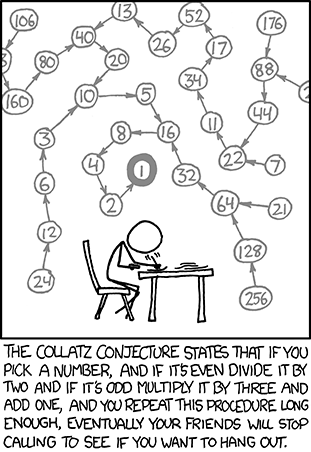

Use the right algorithm and solve the right problem. Specifically for the Collatz Conjecture, it appears that you are trying to solve it in the xkcd way:

You are starting at a number and doing a tree traversal. This rapidly leads to a very large search space. A quick run to calculate the number of iterations for the correct answer results in about 500 steps. This shouldn't be an issue for recursion with a small stack frame.

While knowing the recursive solution is not a bad thing, one should also realize that many times the iterative solution is better. A number of ways of approaching converting a recursive algorithm to an iterative one can be seen on Stack Overflow at Way to go from recursion to iteration.

Best Answer

The issue isn't on the input (obviously, with unbounded input, you can have unbounded running time just to read the input), it is on the number of internal states.

When the number of internal state is bounded, theoretically you can solve the halting problem in all cases (just emulate it until you reach halting or the repetition of a state), when it isn't, there are cases where it isn't solvable. But even if the number of internal states is in practice bounded, it is also so huge that the methods relying of the boundedness of the number of internal states are useless to prove the termination of any but the most trivial programs.

There are more practical ways to check the termination of programs. For instance, express them in a programming language which hasn't recursion nor goto and whose looping structures have all a bound on the number of iterations which has to be specified on entry of the loop. (Note that the bound hasn't to be really related to the effective number of iterations, a standard way to prove the termination of a loop is to have a function which you prove is strictly decreasing from one iteration to the other and your entry condition ensure is positive, you could put the first evaluation as your bound).